Docker Demystified: Your Simple Guide to Containers, Images, and More

May 14, 2025

Ever heard developers talking about “Docker” and felt like they were speaking a different language? You’re not alone! Docker has become a huge buzzword in software development and DevOps, but what exactly is Docker? If you’re a beginner looking for a simple Docker tutorial, you’ve come to the right place. Let’s break down the basics in plain English.

What's All the Buzz About Docker?

Imagine you’re cooking a really complex dish. You have the perfect recipe (your application code), specific, high-quality ingredients (libraries and dependencies), and a meticulously set-up kitchen (your development environment). It works perfectly in your kitchen. But what happens when you try to cook the same dish in a friend’s kitchen? Maybe they don’t have the right pan, a specific spice, or their oven temperature is off. Suddenly, your perfect dish is a disaster.

This is a classic problem in software: an application works flawlessly on a developer’s laptop (“it works on my machine!”) but breaks when moved to a testing environment or deployed to production servers. What is Docker, then? Think of Docker as a magical kitchen supplier. It provides special boxes called containers that package your entire kitchen setup – the recipe (code), all the ingredients (dependencies), and even the specific oven settings (environment configurations) – into one neat, standardized package. This package can then be shipped and run anywhere – another developer’s machine, a test server, the cloud – and the dish (your application) will come out exactly the same every single time.

Why should you care? Because Docker solves that frustrating “it works on my machine” problem, speeds up development, simplifies deployment, and makes collaboration much smoother. It’s a fundamental tool for modern software development.

The Old Days: Why We Needed Something Like Docker

Before containerization became popular, setting up and managing application environments was often painful. Developers might install different versions of libraries or system tools on their machines compared to the production servers. Ensuring consistency was a constant battle.

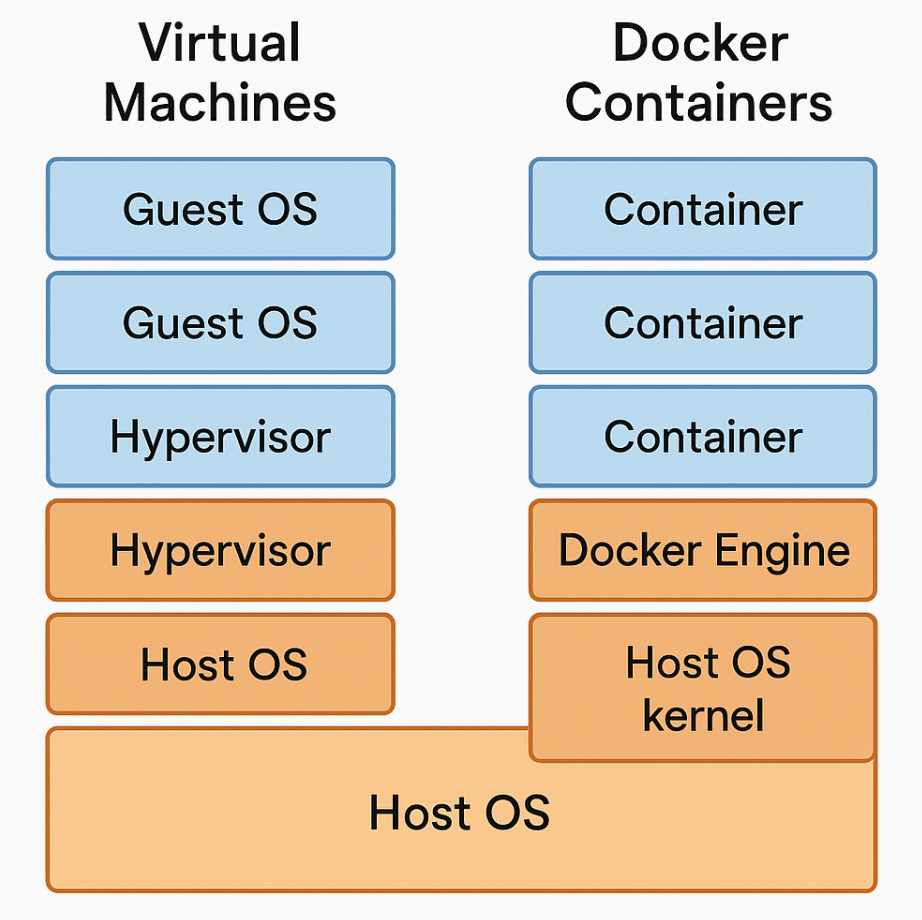

One earlier solution was Virtual Machines (VMs). VMs are like having completely separate computers running on top of your main computer. Each VM includes a full copy of an operating system, along with the application and its dependencies. While VMs provide isolation, they are heavy and consume significant resources (CPU, RAM, disk space) because each one needs that entire guest OS. Running multiple VMs could quickly bog down a server. This is a key difference in the Virtualization vs Containerization debate.

Docker Containers: Your App in a Magic Box

This brings us to Docker Containers. Unlike VMs, containers don’t bundle a full operating system. Instead, they share the host machine’s operating system kernel while keeping the application and its dependencies isolated within the container.

Think of it like an apartment building versus individual houses. VMs are like separate houses, each with its own foundation, plumbing, and roof (the full OS). Containers are like apartments within a single building. Each apartment is private and self-contained (isolated environment with app and dependencies), but they all share the building’s main foundation and infrastructure (the host OS kernel). This shared kernel approach makes containers incredibly lightweight, fast to start, and resource-efficient. This is one of the major Docker benefits.
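One way to see the shared kernel for yourself is to compare the kernel version reported by the host with the one reported inside a container. The sketch below assumes Docker is installed and uses the small public alpine image; the function name kernel_demo is made up for this example, and nothing runs until you call it.

```shell
# Hypothetical helper: compare the kernel seen by the host and by a container.
kernel_demo() {
  # Kernel release reported by the machine you are typing on.
  echo "host:      $(uname -r)"
  # Kernel release reported from inside a throwaway Alpine container.
  echo "container: $(docker run --rm alpine uname -r)"
}
```

Call kernel_demo in a terminal to try it. On a native Linux host the two lines should match, because the container has no kernel of its own. With Docker Desktop on macOS or Windows, the container reports the kernel of the small Linux virtual machine that Docker Desktop runs behind the scenes.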

Docker Images: The Recipe for Your Container

So, how do you create these magic boxes (containers)? You start with a Docker Image. If a container is the running instance of your application (the cooked dish), the Docker Image is the blueprint or template used to create it (the detailed recipe and pre-packaged ingredient kit).

A Docker Image is a read-only template, stored as a stack of layers, containing everything needed to run a piece of software: the application code, a runtime (like Node.js or Python), system tools, libraries, and settings. When you want to run your application, you tell Docker to launch a container from that specific Image.

Where do these images come from? Often, you’ll use pre-built images available on Docker Hub, which is like a giant online library or registry for Docker Images. You can find official images for databases (like MySQL, PostgreSQL), programming languages (Python, Java), web servers (Nginx, Apache), and much more. You can also create and share your own custom images.
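As a rough illustration of the image-to-container relationship, the sketch below downloads an official image from Docker Hub, lists it locally, and starts a short-lived container from it. It assumes Docker is installed and that you have network access; the function name image_demo is made up for this example, and nothing runs until you call it.

```shell
# Hypothetical helper: fetch an image and launch a container from it.
image_demo() {
  # Download the official slim Python image from Docker Hub.
  docker pull python:3.9-slim
  # List local images from the "python" repository to confirm it arrived.
  docker image ls python
  # Start a container from the image, run one command, then remove it (--rm).
  docker run --rm python:3.9-slim python --version
}
```

The image stays on your machine after the container exits, so a second docker run against it starts without downloading anything again.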

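To build a custom image, you write a Dockerfile: a plain text file of instructions that Docker executes in order. Common instructions include:

- FROM: Which base image to start with (e.g., FROM python:3.9-slim).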

- WORKDIR: Set the working directory inside the image.

- COPY: Copy files from your computer into the image (e.g., COPY . . to copy your application code).

- RUN: Execute a shell command during the image build process (e.g., RUN pip install -r requirements.txt to install Python dependencies).

- EXPOSE: Document which network ports the container will listen on. This does not publish the ports by itself; you do that with the -p option when you run the container.

- CMD: Specify the default command to run when a container is started from this image (e.g., CMD ["python", "app.py"]).

By writing a Dockerfile, you automate the process of creating your application’s environment, ensuring consistency every time the image is built.
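The instructions listed above can be combined into one small Dockerfile. The following is a minimal sketch, not a production setup: it writes the file into a scratch directory named docker-demo, and the port 5000 and the image tag my-python-app are assumptions chosen for illustration. The file names app.py and requirements.txt follow the examples in the list.

```shell
# Create a scratch directory so nothing in your own project is overwritten.
mkdir -p docker-demo

# Write a minimal Dockerfile that uses each instruction from the list.
cat > docker-demo/Dockerfile <<'EOF'
# Start from a small official Python base image.
FROM python:3.9-slim
# Later paths are relative to /app inside the image.
WORKDIR /app
# Copy the dependency list first so this layer is cached between builds.
COPY requirements.txt .
RUN pip install -r requirements.txt
# Copy the rest of the application code.
COPY . .
# Document the port the app listens on (assumed to be 5000 here).
EXPOSE 5000
# Default command when a container starts from this image.
CMD ["python", "app.py"]
EOF

# With app.py and requirements.txt placed in docker-demo/, build and run with:
#   docker build -t my-python-app docker-demo
#   docker run --rm -p 5000:5000 my-python-app
```

Copying requirements.txt before the rest of the code is a common ordering choice: Docker reuses cached layers when their inputs have not changed, so editing app.py does not force the dependencies to be reinstalled.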

Docker Volumes: Don't Lose Your Data!

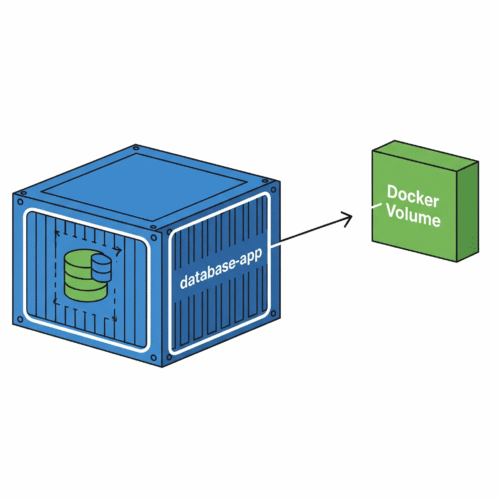

Containers are designed to be ephemeral, meaning they can be stopped, destroyed, and replaced easily without much fuss. This is great for stateless applications, but what about applications that need to store data persistently, like databases or applications that handle user uploads?

If you store data directly inside a container’s writable layer, that data is lost when the container is removed. This is where Docker Volumes come in. A Docker Volume is the preferred mechanism for persisting data generated by and used by Docker containers. Volumes are managed by Docker, stored on the host machine (outside the container’s filesystem), and their lifecycle is independent of the container’s lifecycle.

Think of a Docker Volume like an external USB drive you plug into your container. You can read and write data to it from within the container. If the container is stopped or deleted, the data on the “USB drive” (the Volume) remains safe and can be attached to a new container later.
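The "USB drive" behavior can be sketched with two throwaway containers that share one named volume. This assumes Docker is installed; the volume name demo-data, the mount path /data, and the function name volume_demo are all made up for this example, and nothing runs until you call the function.

```shell
# Hypothetical helper: show that data in a volume outlives a container.
volume_demo() {
  # Create a named volume managed by Docker.
  docker volume create demo-data
  # First container writes a file into the volume, then is removed (--rm).
  docker run --rm -v demo-data:/data alpine \
    sh -c 'echo "hello from container 1" > /data/note.txt'
  # A brand-new container mounts the same volume and can still read the file.
  docker run --rm -v demo-data:/data alpine cat /data/note.txt
  # Delete the volume once you are done experimenting.
  docker volume rm demo-data
}
```

The first container is gone by the time the second one starts, yet the file is still there, because it lives in the volume and not in the container's writable layer.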

Docker Compose: Juggling Multiple Containers

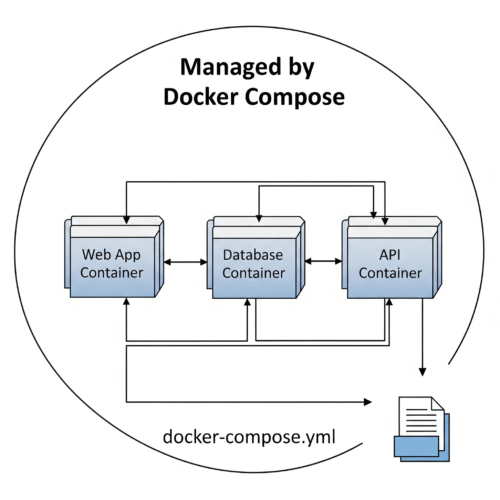

Simple applications might run in a single container, but most real-world applications are more complex. They often consist of multiple interconnected services – perhaps a web frontend, a backend API, a database, and a caching service. Managing the setup, linking, and running of all these individual containers manually using basic Docker commands can become cumbersome.

Enter Docker Compose. Docker Compose is a tool specifically designed for defining and running multi-container Docker applications. You use a simple configuration file written in YAML (usually named docker-compose.yml) to define all the services that make up your application.

In this file, you specify which Docker Image each service should use, how they should be networked together, which ports to expose, which Docker Volumes to attach, and other configuration details. Then, with a single command (docker compose up, or docker-compose up with the older standalone tool), Docker Compose reads your file and starts all the containers for your application as a single unit. A matching docker compose down stops and removes them.
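To make this concrete, here is a minimal sketch of a two-service Compose file, written into a scratch directory named compose-demo. The service names web and db, the port 5000, the volume name db-data, and the placeholder password are assumptions chosen for illustration; the web service expects a Dockerfile in the same directory.

```shell
# Create a scratch directory so nothing in your own project is overwritten.
mkdir -p compose-demo

# Write a Compose file describing a web app and the database it depends on.
cat > compose-demo/docker-compose.yml <<'EOF'
services:
  web:
    # Build the image from the Dockerfile in this directory.
    build: .
    # Publish container port 5000 on host port 5000.
    ports:
      - "5000:5000"
    # Start the database container before the web container.
    depends_on:
      - db
  db:
    # Use the official PostgreSQL image from Docker Hub.
    image: postgres:16
    environment:
      # Placeholder only; use a real secret outside of experiments.
      POSTGRES_PASSWORD: example
    # Keep database files in a named volume so they survive container removal.
    volumes:
      - db-data:/var/lib/postgresql/data
volumes:
  db-data:
EOF

# From inside compose-demo/ (with a Dockerfile for the web service present):
#   docker compose up -d    # start both services in the background
#   docker compose ps       # list the running services
#   docker compose down     # stop and remove the containers
```

Compose places both services on a shared network by default, so the web container can reach the database using the hostname db.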

Getting Your Feet Wet: Trying Docker

Ready to try Docker? The best way to start is by installing Docker Desktop (available for Windows, macOS, and Linux) from the official Docker website. The Docker installation process is usually straightforward.

Once installed, you can open your terminal or command prompt and run the classic first command:

docker run hello-world

This simple command downloads a tiny test Docker Image (if you don’t have it already) and runs it in a container. If it prints a “Hello from Docker!” message, your installation is working! This is the first step in many a Docker tutorial.

Conclusion: Why Docker Rocks for Beginners (and Pros!)

So, what is Docker? It’s a powerful platform that simplifies how we build, ship, and run applications using containers. For beginners, it lowers the barrier to entry for understanding application environments and deployment. For experienced developers and DevOps teams, it provides consistency, efficiency, and scalability.

The key takeaways and Docker benefits are:

- Consistency: Applications run the same way everywhere.

- Efficiency: Containers are lightweight and fast.

- Isolation: Each app is packaged with its own dependencies and runs separately from other containers.

- Simplicity: Tools like Dockerfile and Docker Compose streamline complex processes.

- Collaboration: Easier to share environments and applications.

Learning Docker opens up a world of possibilities in modern software development. While we’ve only scratched the surface with concepts like Docker Image, Docker Volume, and Containerization, hopefully, this Docker for beginners guide has demystified the basics. Don’t be afraid to dive deeper and start experimenting!