December 9, 2025

How I Recovered My Proxmox Ceph Cluster After Multiple OSD Failures

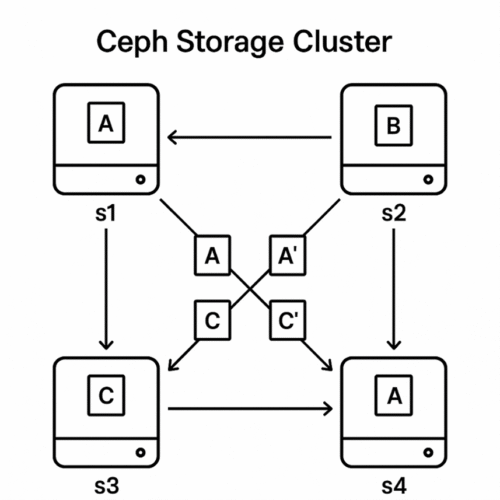

When my Proxmox Ceph cluster went into HEALTH_WARN and three OSDs crashed, every virtual machine in my lab was at risk. Only one OSD was still holding all the data. If that single disk had died, everything would have gone with it.

This article explains how I:

- Brought all Ceph OSDs back online

- Let Ceph rebalance the data

- Increased the replication size so I am no longer running with a single risky copy

It’s a simple, real-world Ceph OSD recovery story that should help anyone running Proxmox with Ceph storage.

What Went Wrong: Ceph HEALTH_WARN and Single Replica Risk

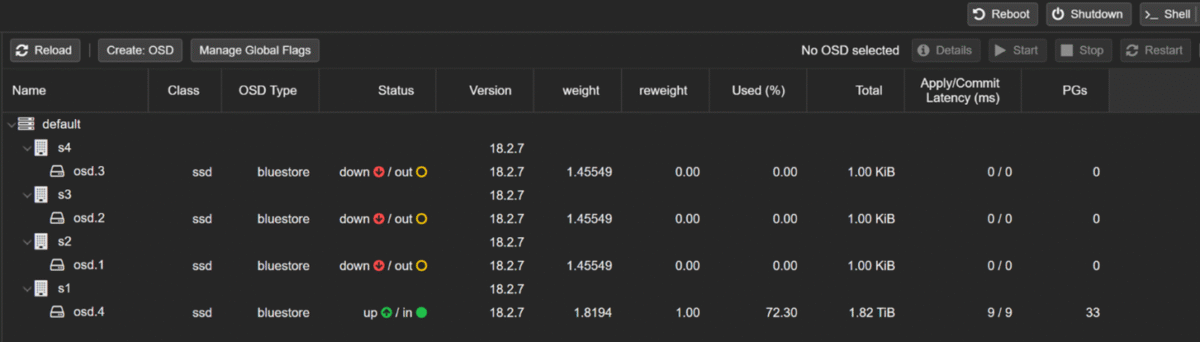

My starting point looked like this:

ceph -s

- HEALTH_WARN

- Only 1 OSD up and in (for example, osd.4)

- Pool DiskPool had size 1, min_size 1

- All VM disks were stored on that one remaining OSD

In other words:

- No redundancy: every RBD image (VM disk) existed on a single physical disk.

- If that disk failed, Ceph had no other copy to recover from, and the data would be lost.

For Ceph newbies, the important terms are:

- size – how many copies of each object Ceph keeps.

  - size 1 = one copy only (very risky).

  - size 2 = two copies (safer).

- min_size – minimum number of copies that must be available for Ceph to serve I/O.

  - With min_size 1, Ceph will still read/write even with a single copy.

  - With min_size 2, Ceph refuses I/O if it cannot guarantee at least two copies.

I was running DiskPool size 1 / min_size 1, so the cluster was “working” but one more disk failure would have meant complete data loss.
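A quick way to spot this situation is to scan the pool list for replicated pools with size 1. The following is a minimal sketch, not something I ran during the recovery: the function name find_single_copy_pools is made up for illustration, and it assumes the pool lines in `ceph osd pool ls detail` look like `pool 2 'DiskPool' replicated size 2 min_size 2 ...` and that pool names contain no spaces.

```shell
# Report replicated pools that keep only a single copy of the data.
# Reads `ceph osd pool ls detail` output on stdin (hypothetical helper).
# Assumed line format: pool 2 'DiskPool' replicated size 2 min_size 2 ...
find_single_copy_pools() {
    awk -v q="'" '
        $1 == "pool" && $4 == "replicated" {
            size = ""; min = ""
            # Walk the key/value pairs that follow the pool type.
            for (i = 5; i < NF; i++) {
                if ($i == "size")     size = $(i + 1)
                if ($i == "min_size") min  = $(i + 1)
            }
            if (size + 0 == 1) {
                name = $3
                gsub(q, "", name)   # strip the quotes around the pool name
                printf "%s size=%s min_size=%s\n", name, size, min
            }
        }
    '
}
```

Typical use would be `ceph osd pool ls detail | find_single_copy_pools`. Any line it prints names a pool that is one disk failure away from data loss.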

Step-by-Step: How I Recovered the Ceph Cluster

Always make sure you have good backups before doing any Ceph surgery.

1. Safely remove the broken OSDs from the cluster

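- For each failed OSD (for example osd.1, osd.2, osd.3), I ran the following, with the systemctl command run on the node that hosted that OSD:

ceph osd out <id>

systemctl stop ceph-osd@<id>

ceph osd crush remove osd.<id>

ceph auth del osd.<id>

ceph osd rm <id>

The stop comes before the removal commands because ceph osd rm refuses to remove an OSD that is still marked up. Mine had already crashed, so the order mattered less in my case.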

This tells Ceph:

These OSDs are gone. Stop trying to use them.

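In the lsblk output I could still see the old OSD block devices (for example /dev/sdb backing a Ceph LV). Ceph no longer referenced them, so the drives were free to be re-used. If ceph-volume rejects a drive because of leftover LVM metadata, wiping it first with ceph-volume lvm zap /dev/sdb --destroy removes the old volumes.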
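With several failed OSDs, the removal sequence is easy to wrap in a small helper. This is only a sketch under my own assumptions: remove_failed_osd and the DRY_RUN variable are hypothetical names, and the helper prints the commands by default so they can be reviewed before anything is changed. It stops the daemon before deleting the OSD entry, since `ceph osd rm` refuses an OSD that is still marked up, and the systemctl step only makes sense on the node that hosts the OSD.

```shell
# Print (default) or run the removal sequence for one failed OSD.
# DRY_RUN=1 (default) only echoes the commands; DRY_RUN=0 executes them.
# Hypothetical helper; run it on the node that hosts the OSD.
remove_failed_osd() {
    osd_id=$1
    case $osd_id in
        ''|*[!0-9]*)
            echo "usage: remove_failed_osd <numeric-osd-id>" >&2
            return 1
            ;;
    esac
    run=echo
    if [ "${DRY_RUN:-1}" = "0" ]; then
        run=
    fi
    # Each step runs only if the previous one succeeded.
    $run ceph osd out "$osd_id" &&
    $run systemctl stop "ceph-osd@$osd_id" &&
    $run ceph osd crush remove "osd.$osd_id" &&
    $run ceph auth del "osd.$osd_id" &&
    $run ceph osd rm "$osd_id"
}
```

Calling `remove_failed_osd 3` prints the five commands for osd.3, and `DRY_RUN=0 remove_failed_osd 3` actually runs them.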

2. Recreate fresh OSDs on the same drives

On each node (s2, s3, s4), I created a brand-new OSD using ceph-volume:

ceph-volume lvm create --data /dev/sdb

Ceph did the following automatically:

- Created a new VG and LV for the OSD block

- Generated a new OSD ID (0, 1, 2, etc.)

- Initialized a fresh BlueStore object store on the new LV

- Enabled and started ceph-osd@<id>.service

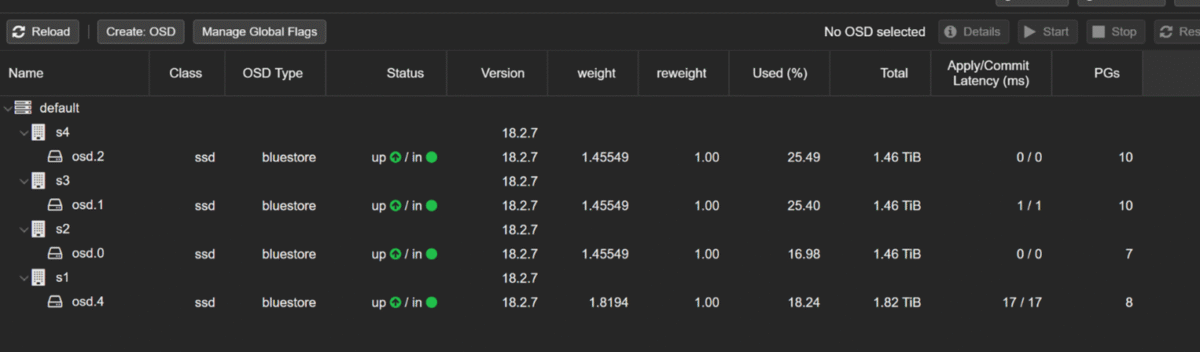

After doing this on all three nodes, my ceph osd tree looked like:

ceph osd tree

4 OSDs: osd.0, osd.1, osd.2, osd.4

All up and in

3. Allow Ceph to rebalance and backfill

Earlier, I had set some safety flags during the disaster:

- noout – stops Ceph from marking OSDs as out

- nobackfill and norecover – stop rebalancing and recovery

Once the fresh OSDs were ready, I removed those flags:

ceph osd unset noout

ceph osd unset nobackfill

ceph osd unset norecover
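If you are not sure which of these flags are still set, the flag list can be read from `ceph osd dump`, which prints a line beginning with `flags`. The helper below is a sketch built on that assumption. The name unset_recovery_flags is hypothetical, and it only clears the three flags mentioned above.

```shell
# Clear the recovery-blocking flags, but only those that are currently set.
# Relies on `ceph osd dump` printing a line such as:
#   flags noout,nobackfill,norecover,sortbitwise
# Hypothetical helper, not part of Ceph itself.
unset_recovery_flags() {
    current=$(ceph osd dump | awk '$1 == "flags" { print $2 }')
    for flag in noout nobackfill norecover; do
        # Wrap the list in commas so only whole flag names match.
        case ",$current," in
            *",$flag,"*) ceph osd unset "$flag" ;;
        esac
    done
}
```

Flags that are not set are simply skipped, so the helper can be re-run without side effects.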

Ceph immediately started remapping and backfilling PGs to spread data from the one surviving OSD onto the new ones. During this time, ceph -s showed:

- Many PGs in active+remapped+backfill_wait or active+remapped+backfilling

- High recovery bandwidth in MiB/s

I just let it run. Depending on your network and disk speed, this can take hours.
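Rather than re-running ceph -s by hand, a small polling loop can wait for the cluster to settle. This is a sketch, assuming `ceph health` prints a status that starts with HEALTH_OK once recovery is complete. The function name wait_for_health_ok and its default interval and retry count are my own choices for illustration.

```shell
# Poll `ceph health` until it reports HEALTH_OK.
# $1 = seconds between checks (default 30)
# $2 = maximum number of checks before giving up (default 240)
# Hypothetical helper for illustration.
wait_for_health_ok() {
    interval=${1:-30}
    max_checks=${2:-240}
    n=0
    while [ "$n" -lt "$max_checks" ]; do
        status=$(ceph health 2>/dev/null)
        case $status in
            HEALTH_OK*)
                echo "HEALTH_OK after $n retries"
                return 0
                ;;
        esac
        echo "still recovering: ${status:-no response}"
        n=$((n + 1))
        sleep "$interval"
    done
    echo "gave up after $max_checks checks" >&2
    return 1
}
```

For example, `wait_for_health_ok 60 300` checks once a minute for up to five hours and returns non-zero if the cluster never reaches HEALTH_OK.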

4. Increase pool size to get real redundancy

Once everything was stable and all OSDs were up, I changed the pool replication:

ceph osd pool set DiskPool size 2

ceph osd pool set DiskPool min_size 2

This did two important things:

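- size 2 – Ceph now keeps two copies of every object in DiskPool.

- min_size 2 – Ceph will not serve I/O for a placement group unless both copies are available.

This protects you from silent “single copy” mode. If one OSD disappears, Ceph pauses I/O to the affected placement groups and reports the problem instead of quietly running with no redundancy. The trade-off is that with size 2, even a planned reboot of one OSD node blocks those placement groups until the OSD returns or Ceph has re-replicated the data elsewhere.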
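To avoid typos when changing replication settings, the two commands can be wrapped with a couple of sanity checks. The sketch below is an illustration only. set_pool_replication is a hypothetical helper that refuses a size below 2 and a min_size outside the range 1 to size before calling the same two `ceph osd pool set` commands.

```shell
# Set size and min_size on a pool after basic validation.
# Usage: set_pool_replication <pool> <size> <min_size>
# Hypothetical helper; it only wraps `ceph osd pool set`.
set_pool_replication() {
    pool=$1
    size=$2
    min_size=$3
    if [ -z "$pool" ]; then
        echo "usage: set_pool_replication <pool> <size> <min_size>" >&2
        return 2
    fi
    for value in "$size" "$min_size"; do
        case $value in
            ''|*[!0-9]*)
                echo "usage: set_pool_replication <pool> <size> <min_size>" >&2
                return 2
                ;;
        esac
    done
    if [ "$size" -lt 2 ]; then
        echo "refusing size $size: a single copy has no redundancy" >&2
        return 1
    fi
    if [ "$min_size" -lt 1 ] || [ "$min_size" -gt "$size" ]; then
        echo "min_size must be between 1 and size ($size)" >&2
        return 1
    fi
    # Raise size first, then min_size.
    ceph osd pool set "$pool" size "$size" &&
    ceph osd pool set "$pool" min_size "$min_size"
}
```

`set_pool_replication DiskPool 2 2` reproduces the change described in this step, while `set_pool_replication DiskPool 1 1` is rejected.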

After the rebalancing finished, my final ceph -s looked like:

health: HEALTH_OK

osd: 4 osds: 4 up, 4 in

pools: 33 pgs

pgs: 33 active+clean

And:

ceph osd pool ls detail | grep -A3 DiskPool

pool 2 'DiskPool' replicated size 2 min_size 2 ...

Now every VM disk stored on DiskPool has two copies on different OSDs.

Key Lessons for Anyone Running Proxmox and Ceph

- Don’t run production workloads with Ceph pool size 1 – it’s basically no better than a single disk.

- Always check ceph osd pool ls detail to see your size and min_size values.

- After OSD failures, it’s often safer to remove and recreate OSDs than to fight with heavy corruption.

- Let Ceph handle backfill and recovery; do not rush or interrupt unless absolutely necessary.

By rebuilding the failed OSDs and increasing the pool size to 2, my Proxmox Ceph storage moved from “one disk away from disaster” to a proper redundant Ceph cluster.